GPU Process Experiment Results

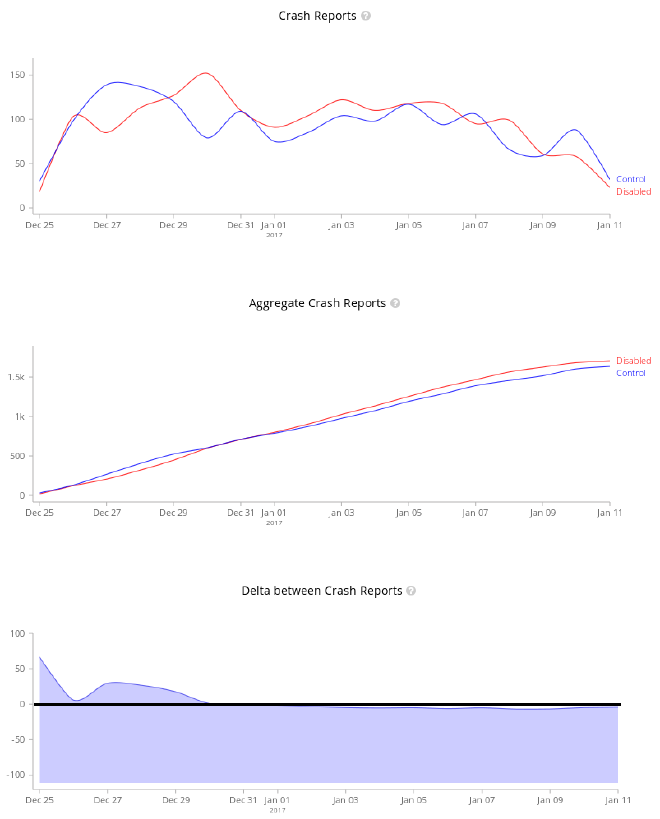

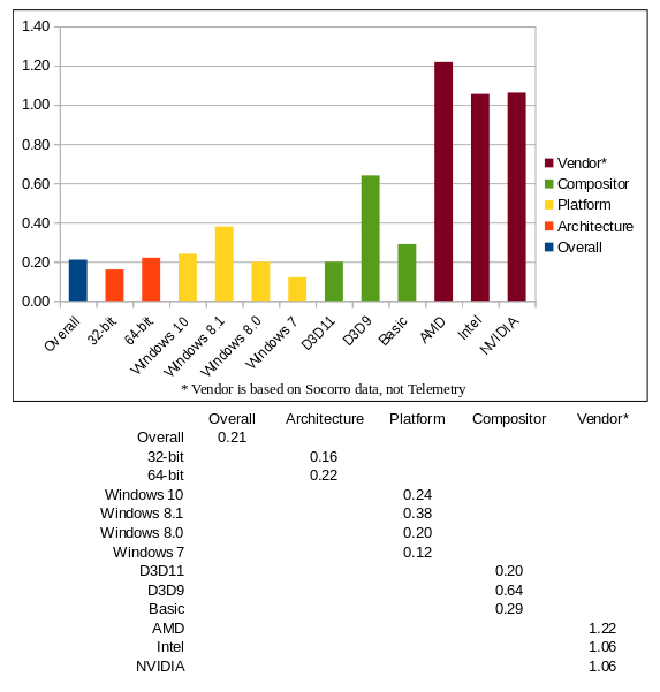

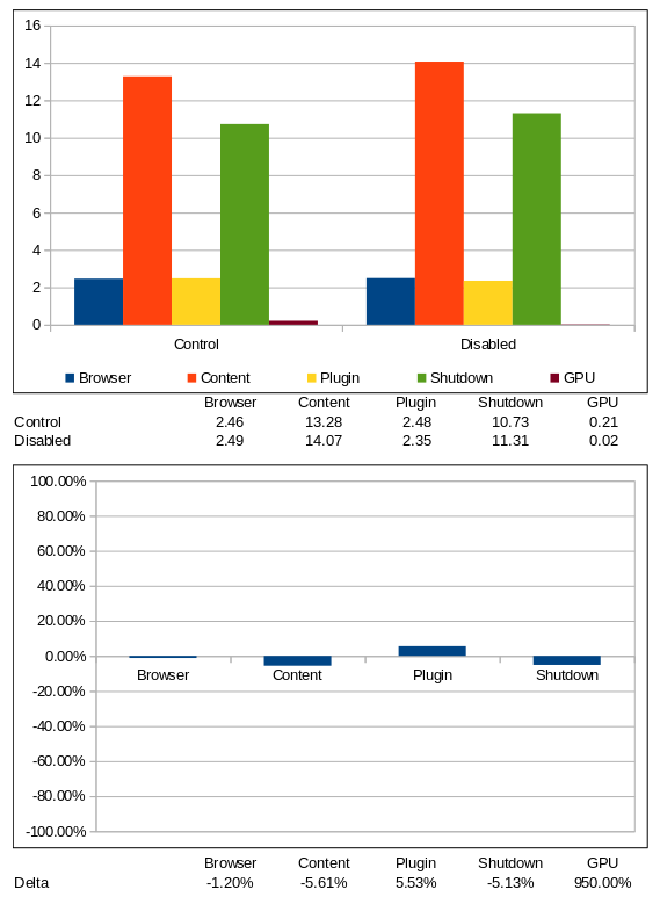

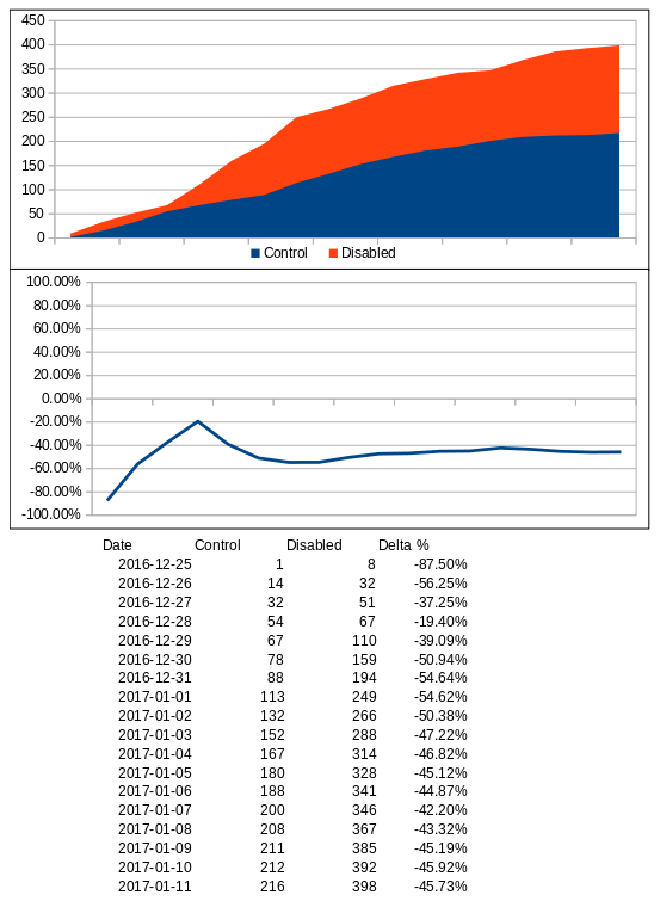

Update: It was pointed out that the unlabeled axes made it hard to know exactly what the charts measure. For all charts measuring crashes (i.e. not the percentage charts) the y-axis represents crash rate, where crash rate for Telemetry data is defined as “crashes per 1000 hours of usage” and crash rate for Socorro data is defined as “crashes per number of unique installations”. The latter only applies to the Breakdown by Vendor chart and the vendor bars in the Breakdown of GPU Process Crashes chart. The x-axis for all charts is the date.
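To make the two definitions concrete, here is a minimal Python sketch of both crash rates. The function names and the input numbers are made up for illustration; they are not taken from the experiment data or from any Telemetry or Socorro API.

```python
def crash_rate_per_1000_hours(crashes: int, usage_hours: float) -> float:
    """Telemetry-style rate: crashes per 1,000 hours of usage."""
    if usage_hours <= 0:
        raise ValueError("usage_hours must be positive")
    return crashes / usage_hours * 1000.0


def crash_rate_per_installation(crashes: int, unique_installations: int) -> float:
    """Socorro-style rate: crash reports per unique installation."""
    if unique_installations <= 0:
        raise ValueError("unique_installations must be positive")
    return crashes / unique_installations


# Hypothetical inputs, for illustration only.
telemetry_rate = crash_rate_per_1000_hours(crashes=42, usage_hours=60_000)
socorro_rate = crash_rate_per_installation(crashes=150, unique_installations=3_000)

print(f"{telemetry_rate:.2f} crashes per 1,000 usage hours")
print(f"{socorro_rate:.3f} crash reports per installation")
```

Because the two rates use different denominators, they should not be compared against each other directly, only between cohorts within the same data source.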

GPU Process has landed with 4% fewer crash reports overall!

- 1.2% fewer Browser crashes

- 5.6% fewer Content crashes

- 5.1% fewer Shutdown crashes

- 5.5% more Plugin crashes

- 45% fewer GPU driver crash reports!

Thanks to David Anderson, Ryan Hunt, George Wright, Felipe Gomes, Jukka Jylänki

Several months ago Mozilla’s head of Platform Engineering, David Bryant, wrote a post on Medium detailing a project named Quantum. Built on the foundation of quality and stability we’d built up over the previous year, and using some components of what we learned through Servo, this project seeks to enable developers to “tap into the full power of the underlying device”. As David points out, “it’s now commonplace for devices to incorporate one or more high-performance GPUs”.

It may surprise you to learn that one of these components has already landed in Nightly and has been there for several weeks: GPU Process. Without going into too much technical detail, this adds a separate Firefox process set aside exclusively for things we want the GPU (graphics processing unit) to handle.

I started doing quality assurance with Mozilla in 2007 and have seen a lot of pretty bad bugs over the years, from subtle rendering issues to more aggressive issues such as the screen going completely black, forcing the user to restart their computer. Even something as innocuous as playing a game with Firefox running in the background was enough to create a less than desirable situation.

Unfortunately many of these issues stem from interactions with drivers which are out of our control, especially in cases where users are stuck with older, unmaintained drivers. A lot of the time our only option is to blacklist the device and/or driver. This forces users down the software rendering path, which often results in a sluggish experience for some content, or missing out altogether on higher-end user experiences, all in an effort to at least stop them from crashing.

While the GPU Process won’t in and of itself prevent these types of bugs, it should enable Firefox to handle these situations much more gracefully. If you’re on Nightly today and you’re using a system that qualifies (currently Windows 7 SP1 or later, a D3D9 capable graphics card, and whitelisted for using multi-process Firefox aka e10s), you’ve probably had GPU Process running for several weeks and didn’t even notice. If you want to check for yourself it can be found in the Graphics section of the about:support page. To try it out do something that normally requires the video card (high quality video, WebGL game, etc) and click the Terminate GPU Process button — you may experience a slight delay but Firefox should recover and continue without crashing.

Before I go any further I would like to thank David Anderson, Ryan Hunt, and George Wright for doing the development work to get GPU Process working. I also want to thank Felipe Gomes and Jukka Jylänki for helping me work through some bugs in the experimentation mechanism so that I could get this experiment up and running in time.

The Experiment

As a first milestone for GPU Process we wanted to make sure it did not introduce a serious regression in stability and so I unleashed an experiment on the Nightly channel. For two weeks following Christmas, half of the users who had GPU Process enabled on Nightly were reverted to the old, one browser + one content process model. The purpose of this experiment was to measure the anonymous stability data we receive through Telemetry and Socorro, comparing this data between the two user groups. The expectation was that the stability numbers would be similar between the two groups and the hope was that GPU Process actually netted some stability improvements.
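This post does not describe how the experimentation mechanism chose which half of the users to revert, so the following is only a sketch of one common way to get a stable 50/50 split: hash a client identifier and use the result to pick a group. The function name, the experiment label, and the client IDs are all hypothetical.

```python
import hashlib


def assign_cohort(client_id: str, experiment: str = "gpu-process-experiment") -> str:
    """Deterministically place a client in one of two equally likely cohorts."""
    digest = hashlib.sha256(f"{experiment}:{client_id}".encode("utf-8")).digest()
    # Interpret the first 8 bytes as an integer: even -> control, odd -> disabled.
    bucket = int.from_bytes(digest[:8], "big") % 2
    return "control" if bucket == 0 else "disabled"


# Hypothetical client IDs, for illustration only.
clients = [f"client-{n}" for n in range(10_000)]
cohorts = [assign_cohort(c) for c in clients]

print("control:", cohorts.count("control"))
print("disabled:", cohorts.count("disabled"))
```

Hashing rather than drawing a random number on each run keeps a given client in the same group for the whole experiment, which matters when crash data is collected over two weeks.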

Now that the experiment has wrapped up I’d like to share the findings. Before we dig in I would like to explain a key distinction between Telemetry and Socorro data. While we get more detailed data through Socorro (crash signatures, graphics card information, etc), the data relies heavily on users clicking the Report button when a crash occurs; no reports = no data. As a result Socorro is not always a true representation of the entire user population. On the other hand, Telemetry gives us a much more accurate representation of the user population since data is submitted automatically (Nightly uses an opt-out model for Telemetry). However, we don’t get as much detail: for example, we know how many crashes users are experiencing but not necessarily which crashes they are hitting.

I refer to both data sets in this report as they are each valuable on their own but also for checking assumptions based on a single source of data. I’ve included links to the data I used at the end of this post.

As a note, I will use the term “control” to refer to those in the experiment who were part of the control group (i.e. users with GPU Process enabled) and “disabled” to refer to those in the experiment who were part of the test group (i.e. users with GPU Process disabled). Each group represents a few thousand Nightly users.

Daily Trend

To start with I’d like to present the daily trend data. This data comes from Socorro and is graphed on my own server using the MetricsGraphics.js framework. As you can see, day to day data from Socorro can be quite noisy. However when we look at the trend over time we can see that overall the control group reported roughly 4% fewer crashes than those with GPU Process disabled.
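One simple way to see a trend through noisy daily numbers is a rolling average. The sketch below is not how the charts in this post were produced; it only illustrates the idea, and the daily counts are invented.

```python
from statistics import mean


def rolling_mean(values, window):
    """Average each run of `window` consecutive values."""
    if window < 1:
        raise ValueError("window must be at least 1")
    return [mean(values[i:i + window]) for i in range(len(values) - window + 1)]


# Hypothetical daily crash report counts for one cohort.
daily_reports = [31, 18, 44, 27, 39, 22, 36, 29, 41, 25]

# A 7-day window evens out day-of-week effects as well as random noise.
smoothed = rolling_mean(daily_reports, window=7)
print(smoothed)
```

A longer window gives a smoother line at the cost of fewer points, which is a real constraint for an experiment that only ran for two weeks.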

Breakdown of GPU Process Crashes

Crashes in the GPU Process itself compare favourably across the board, well below 1.0 crashes per 1,000 hours of usage, and much lower than the crash rates we see from other Firefox processes (I’ll get into this more below). The following chart is very helpful in illustrating where our challenges might lie and may well inform roll-out plans in the future. It’s clear that Windows 8.1 and AMD hardware are the worst of the bunch while Windows 7 and Intel are the best.

Breakdown by Process Type

Of course, the point of GPU Process is not just to see how stable the process is itself but also to see what impact it has on crashes in other processes. Here we can see that stability in other processes is improved almost universally by 5%, except for plugin crashes which are up by 5%.
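The percentages in this report compare the control group against the disabled group. A minimal sketch of that calculation follows, assuming the disabled group is used as the baseline; the crash rates are made-up values chosen to give round numbers, not the experiment's data.

```python
def relative_change_pct(control_rate: float, disabled_rate: float) -> float:
    """Percent change of the control group relative to the disabled group.

    Negative means fewer crashes with GPU Process enabled.
    """
    if disabled_rate <= 0:
        raise ValueError("disabled_rate must be positive")
    return (control_rate - disabled_rate) / disabled_rate * 100.0


# Hypothetical crash rates per 1,000 usage hours, for illustration only.
disabled = {"browser": 2.00, "content": 4.00, "plugin": 1.00}
control = {"browser": 1.90, "content": 3.80, "plugin": 1.05}

for process in disabled:
    change = relative_change_pct(control[process], disabled[process])
    print(f"{process}: {change:+.1f}%")
```

With these inputs the browser and content rates come out 5% lower and the plugin rate 5% higher, which is the kind of difference the process chart is meant to show.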

GPU Driver Crashes

One of the areas we expected to see the biggest wins was in GPU driver crashes. The theory is that driver crashes would move to the GPU Process and no longer take down the entire browser. The user experience of drivers crashing in the GPU Process still needs to be vetted but there does appear to be a noticeable impact with driver crash reports being reduced overall by 45%.

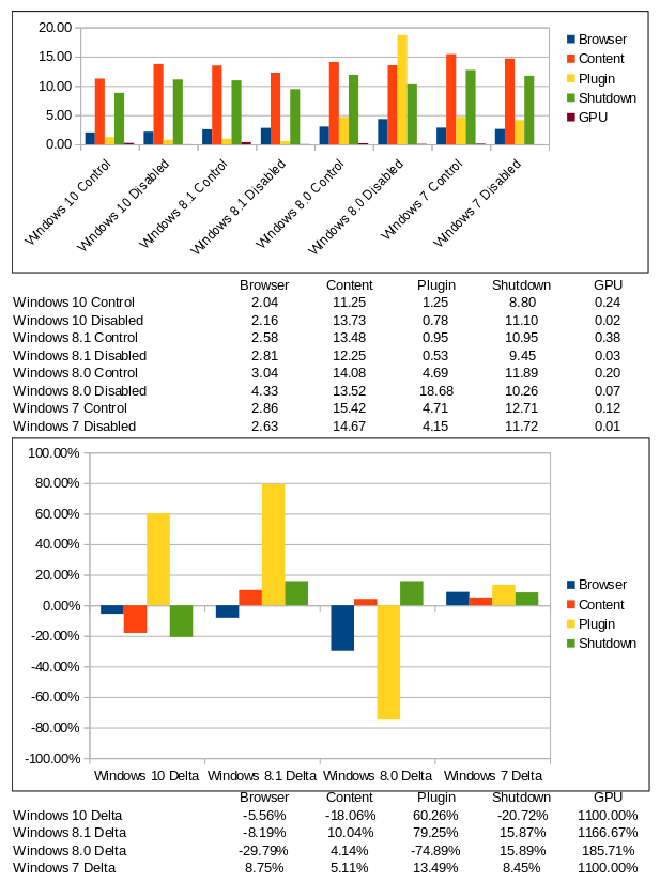

Breakdown by Platform

Now we dig in deeper to see how GPU Process impacts stability on a platform level. Windows 8.0 with GPU Process disabled is the worst, especially when Plugin crashes are factored in, while Windows 10 with GPU Process enabled seems to be quite favourable overall.

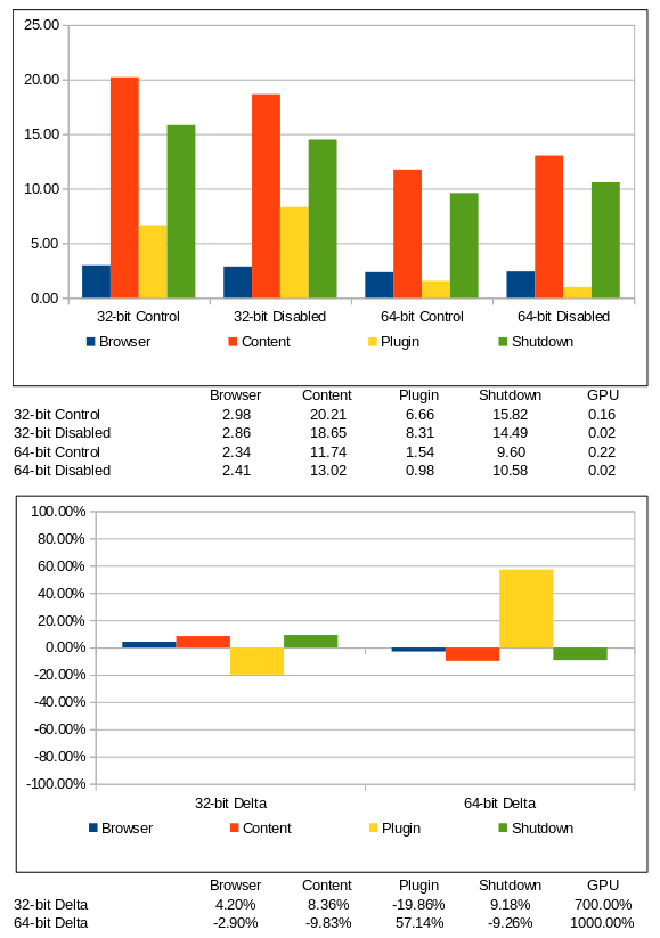

Breakdown by Architecture

Breaking the data down by architecture we can see that 64-bit seems to be much more stable overall than 32-bit. 64-bit sees improvement across the board except for plugin process crashes, which regress significantly. 32-bit sees an inverse effect, albeit at a smaller scale.

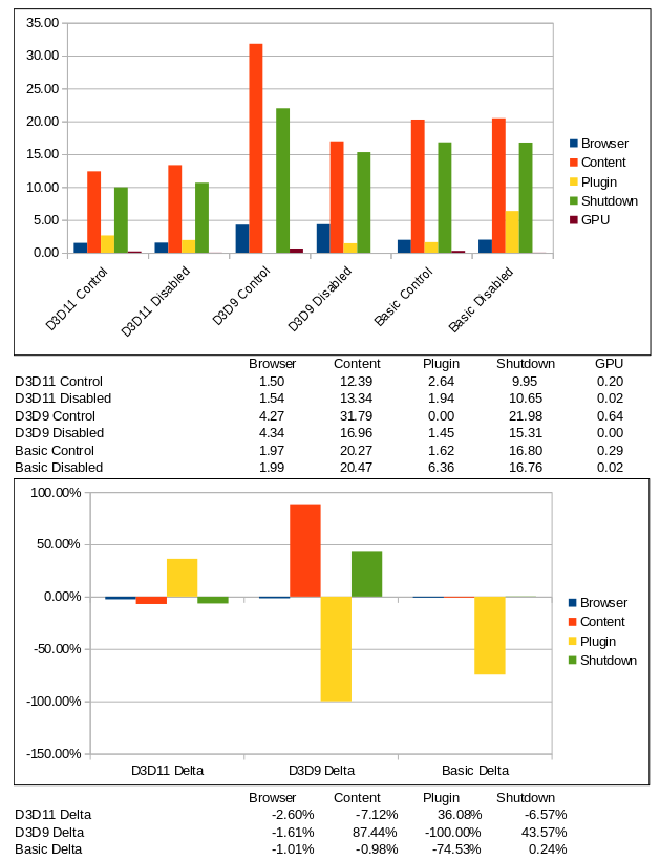

Breakdown by Compositor

Taking things down to the compositor level we can see that D3D11 performs best overall, with or without the GPU Process, but does seem to benefit from having it enabled. There is a significant 36% regression in Plugin process crashes though, which needs to be looked at; we don’t see this with D3D9 or Basic compositing. D3D9 itself seems to carry a regression as well, in Content and Shutdown crashes. These are challenges we need to address and keep in mind as we determine what to support as we get closer to release.

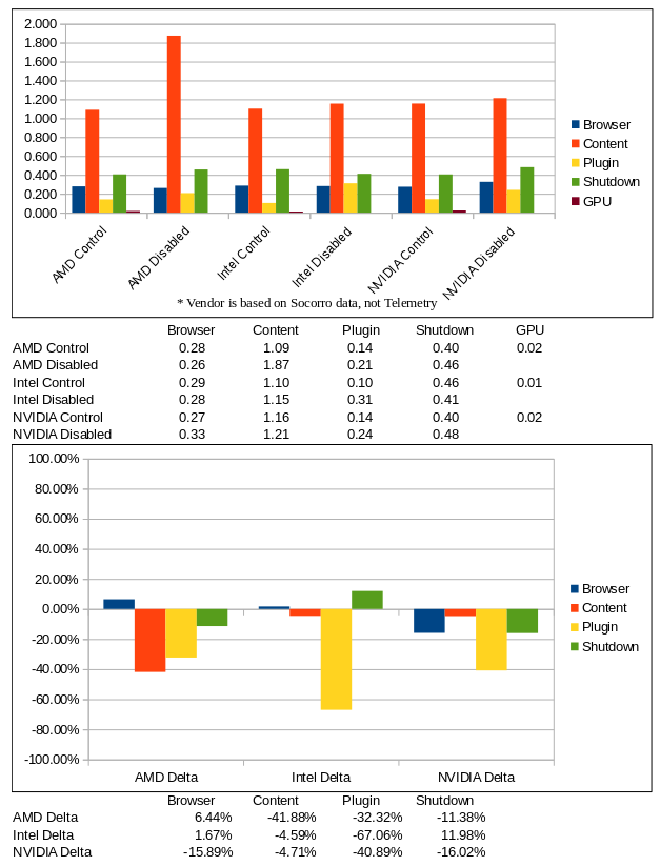

Breakdown by Graphics Card Vendor

Looking at the data broken down by graphics card vendor there is significant improvement across the board from GPU Process, with the only exceptions being a 6% regression in Browser crashes on AMD hardware and a 12% regression in Shutdown crashes on Intel hardware. However, considering this data comes from Socorro we cannot say that these results are universal. In other words, these are regressions in the number of crashes reported, which do not necessarily map one-to-one to the number of crashes that occurred.

Signature Comparison

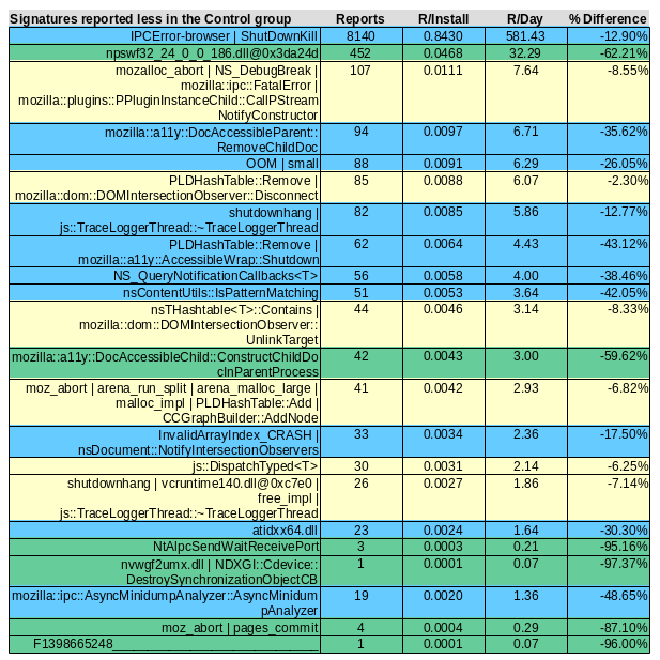

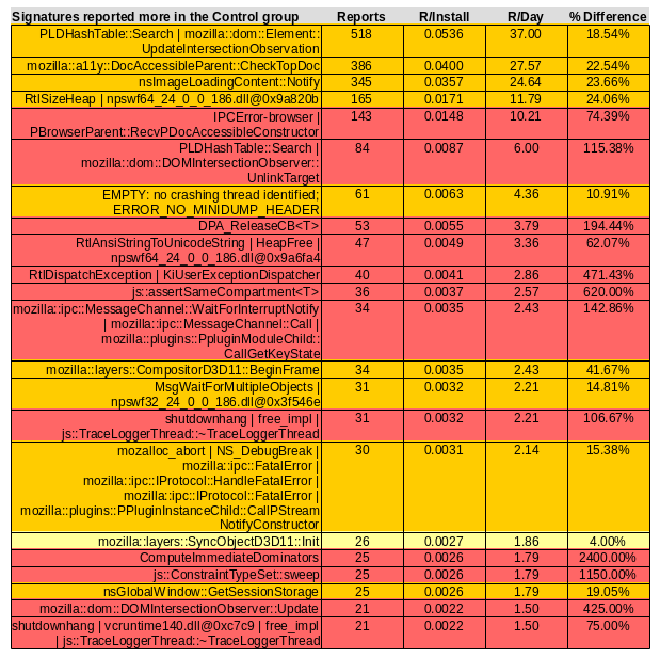

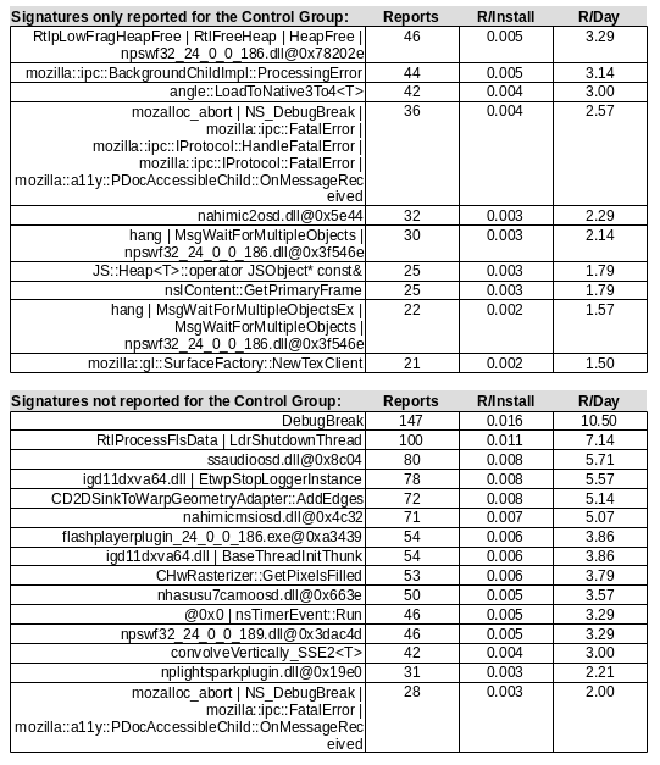

As a final comparison, since some of the numbers above varied quite a lot between the Control and Test groups, I wanted to look at the top signatures reported by these separate groups of users to see where they did and did not overlap.

This first table shows the signatures that saw the greatest improvement. In other words these crashes were much less likely to be reported in the Control group. Of note there are multiple signatures related to Adobe Flash and some related to graphics drivers in this list.

This next table shows the inverse, the crashes which were more likely to be reported with GPU Process enabled. Here we see a bunch of JS and DOM related signatures appearing more frequently.

These final tables break down the signatures that showed up in only one of the two cohorts. The top table represents crashes which were only reported when GPU Process was enabled, while the second table represents those which were only reported when GPU Process was disabled. Of note there are more signatures related to driver DLLs in the Disabled group and more Flash related signatures in the Enabled group.
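The comparison behind these tables comes down to set operations on the signatures reported by each group. Here is a minimal sketch; the function name is my own and the signature strings are placeholders, not real crash signatures from Socorro.

```python
def compare_signatures(enabled, disabled):
    """Split crash signatures into those unique to each cohort and those shared."""
    enabled, disabled = set(enabled), set(disabled)
    return {
        "only_enabled": sorted(enabled - disabled),
        "only_disabled": sorted(disabled - enabled),
        "shared": sorted(enabled & disabled),
    }


# Placeholder signatures, for illustration only.
enabled_signatures = ["signature_a", "signature_b", "signature_c"]
disabled_signatures = ["signature_b", "signature_c", "signature_d", "signature_e"]

result = compare_signatures(enabled_signatures, disabled_signatures)
for group, signatures in result.items():
    print(group, signatures)
```

Keep in mind that with only a few thousand users per group, a signature appearing in one cohort only may simply reflect a rare crash rather than an effect of GPU Process.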

Conclusions

In this first attempt at a GPU Process things are looking good. I wouldn’t say we’re release ready but it’s probably good enough to ship to a wider test audience. We were hoping that stability would be on par overall, with most GPU related crashes moving to the GPU Process and hopefully being much more recoverable (i.e. not taking down the browser). The data seems to indicate this has happened with a 5% win overall. However, this has come at the cost of a 5% regression in plugin stability, and GPU Process seems to perform worse under certain system configurations once you dig deeper into the data. These are concerns that will need to be evaluated.

It’s worth pointing out that this data comes from Nightly users, users who trend towards more modern hardware and more up to date software. We might see swings in either direction once this feature reaches a wider, more diverse population. In addition this experiment only hit half of those users who qualified to use GPU Process which, as it turns out, is only a few thousand users. Finally, this experiment only measured crash occurrences and not how gracefully GPU Process crashes — a critical factor that will need to be vetted before we release this feature to users who are less regression tolerant.

As I close out this post I want to take another moment to thank David Anderson, Ryan Hunt, and George Wright for all the work they’ve put into making this first attempt at GPU Process. In the long run I think this has the potential to make Firefox a lot more stable and faster, not only than previous Firefox versions but potentially than the competition as well. It is a stepping stone to what David Bryant calls the “next-generation web platform”. I also want to thank Felipe Gomes and Jukka Jylänki for their help getting this experiment live. Both of them helped me work through some bugs, despite the All-hands in Hawaii and the Christmas holidays that followed. Without them this experiment might not have happened in time for the Firefox 53 merge to Aurora.

If you made it this far, thank you for reading. Feel free to leave me feedback or questions in the comments. If you think there’s something I’ve reported here that needs to be investigated further please let me know and I’ll file a bug report.

Sources

- Mozilla Telemetry crash rate data by architecture, compositor, platform, process

- Vendor and Topcrash data courtesy Mozilla Socorro